SmartAB™ Wisdom #56: Why Elon Musk Finally Admits The Failure Of Autonomous Driving (AD)

About one year ago, I published a post entitled “SmartAB™ Wisdom #49: The 2024 Death Knell To The Autonomous Driving Coffin?”, in which I described to my readers how, back in 1987, I attended the IEEE First Annual International Conference on Neural Networks in San Diego, California. One of the plenary speakers that year was Terry Sejnowski, a brilliant scientist specializing in computational neuroscience at the Salk Institute.

In his fascinating talk, Terry boldly stated that computational neuroscience hadn’t even reached the level of the bee. I still remember the laughter in the room. For some reason, the audience thought that Terry was joking. Yet he wasn’t. He asked us all to remember that:

No existing plane can dynamically modify its flying pattern the way bees can… In addition, he gently reminded us that computers still don’t mate…

30 years later, Yann LeCun explained that we were now approaching the cognition level of a rat. And yet, despite all such well-known facts, the snake oil salesmen popped up in spades and started peddling predictions that Autonomous Driving was... just around the corner…

So, in 2016, as I saw billions of dollars going down the drain, I spoke about it in my LinkedIn post: “On AI, Autopilots & Self-Driving Cars… Respect The Bee!!!” – see: https://linkedin.com/pulse/ai-autopilots-self-driving-carsand-bee-question-oleg-feldgajer/

But I didn’t stop there, and as billions of wasted dollars turned into tens of billions - I wrote another post and asked all the Autonomous Driving cheerleaders dispensing endless “imagine-the-future” narratives: “Are you ready to send your kid on a school bus WITHOUT a driver?” – see: https://linkedin.com/pulse/you-ready-fly-your-wife-children-next-year-commercial-oleg-feldgajer/

And for those who were still salivating at the thought of self-driving cabs taking them back home from their favorite pub after a heavy binge-drinking session, I posted:

“Self-driving Hype vs Self-parking Reality Or... Form vs Substance in SmartCities” – see: https://www.linkedin.com/pulse/self-driving-hype-vs-self-parking-reality-form-oleg-feldgajer/

My concern was that without restoring common sense, too much energy, time, and money would be wasted… by both employers and employees of many progressive businesses. In some cases, such bad decisions would bring devastating consequences. So, I wrote a book: “AI BOOGEYMAN - Dispelling Fake News About Job Losses.”

You can find hard and soft cover copies on Amazon - see: AI Boogeyman.

My goal was quite simple: to dismiss an unprecedented onslaught of doom and gloom predictions - from the unique perspective of a seasoned AI practitioner.

Tesla’s AD In The News… Again

Lo and behold, a few days ago, https://mitechnews.com/auto-tech/elon-musk-admits-he-was-wrong-about-fully-autonomous-driving/ reported the following: “Elon Musk Admits He Was Wrong About Fully Autonomous Driving.”

The report said: “Tesla owners who expected to enjoy fully autonomous driving in their vehicles might be in for a disappointment. After years of promises, Elon Musk has finally admitted that models equipped with Hardware 3, which were sold as being ready for full self-driving, will need an upgrade. This announcement is not going over well—especially among those who paid thousands of dollars for the Full Self-Driving (FSD) package.

Since 2016, Elon Musk has repeatedly claimed that Tesla cars had the necessary hardware for full self-driving. However, during a call with shareholders, the company’s CEO dropped a bombshell: vehicles equipped with Hardware 3 won’t be enough. To run the self-driving software currently in development, an upgrade to Hardware 4 will be required.

“I think the most honest answer is that we’re going to have to upgrade the Hardware 3 computer for people who bought Full Self-Driving,” Musk admitted, adding that it will be “painful and difficult.” His statement quickly set off a firestorm on forums and social media, with many customers calling it a major reversal.

A Costly Upgrade, but Covered by Tesla

For those who paid $12,000 for the FSD package in the U.S., this announcement is a shock. Many believed they had purchased a vehicle ready for full autonomy, only to now find out their hardware is obsolete before the technology is even available.

The silver lining? Tesla will cover the cost of the upgrade to Hardware 4. This decision is likely driven as much by the desire to ease customer frustration as by the company’s need to avoid legal trouble. In fact, Tesla was previously sued for false advertising after promising an upgrade from Hardware 2 to Hardware 3.

But this move will come at an enormous cost to Tesla, which is already facing intensifying competition from Chinese automakers and struggling with financial pressures.

An Admission That Doesn’t Surprise Everyone

While this announcement has shocked Tesla owners, some industry watchers see it as a predictable outcome of the repeated delays in Full Self-Driving. Since 2016, Elon Musk has continuously promised that full autonomy was just around the corner—only to repeatedly push back the timeline.

In 2019, owners of Hardware 2 and 2.5 were required to upgrade to Hardware 3 to keep their hopes alive for future self-driving capabilities. At the time, Tesla was forced to offer the upgrade for free after being accused of misleading advertising.

Now, history seems to be repeating itself. And the looming question is: will today’s Hardware 4 owners face the same issue in a few years?

Full Self-Driving: A Technology That Remains Out of Reach

Beyond the controversy over hardware, one harsh truth remains: Full Self-Driving is still not available. Despite years of development and real-world testing, the final version of the software has yet to be released.”

And the author concludes: “Meanwhile, competitors are making progress. Waymo, Cruise, and Chinese firms like XPeng are pushing ahead in the race for autonomous vehicles, putting more pressure on Tesla. The ultimate question is whether Tesla will ever fulfill its promise of full autonomy. For now, customers who paid for the feature are still waiting, and many are running out of patience.”

Well, here is where I completely disagree with MITechnews.com. All the “progress claims” made by Waymo, Cruise, and Chinese firms like XPeng are not worth the paper they are printed on. You will find more details in many of my previous AI posts written over the last 9 years; most of them focused on… the complex Cognition challenges of AD. Today, I am focusing on the Quantitative challenges of AD…

System-Level Integration

System-Level Integration includes factors such as:

1. Perception: Self-driving cars need to accurately perceive their environment using cameras, LIDAR, RADAR, and other sensors. They must identify and track objects, such as other vehicles, pedestrians, cyclists, and road signs. Ensuring accuracy in various weather conditions and lighting is particularly challenging.

2. Decision-making: Autonomous vehicles need to make real-time decisions based on the data they collect. This includes navigating intersections, merging onto highways, and responding to unpredictable behavior from other road users. Developing algorithms that can handle these scenarios safely and efficiently is a significant hurdle.

3. Localization and mapping: Self-driving cars must know their location to within a few centimeters. This requires detailed, high-resolution maps and the ability to update them in real time. Achieving this level of accuracy is technically demanding.

4. Ethical and legal considerations: Autonomous driving raises numerous ethical questions, such as how to prioritize safety in situations where accidents are unavoidable. Additionally, there are regulatory and liability issues that need to be addressed before widespread deployment.

5. Human behavior: Humans are unpredictable, and self-driving cars must be able to anticipate and react to the actions of human drivers, pedestrians, and cyclists. This requires sophisticated machine learning models trained on vast amounts of data.

6. System integration: Building a reliable and robust autonomous vehicle system requires integrating numerous hardware and software components, which must work seamlessly together. Ensuring the system's reliability and safety under various conditions is a significant engineering challenge.

The Perception Challenge

Perception is a fundamental aspect of autonomous driving, as it allows the vehicle to understand its surroundings and make informed decisions. Here are some key components of perception in autonomous vehicles:

• Sensors

Autonomous vehicles rely on a variety of sensors to collect data about their environment. The primary sensors used include:

o Cameras: Provide high-resolution images and video, helping the vehicle detect and classify objects, read road signs, and recognize traffic signals.

o LIDAR (Light Detection and Ranging): Uses laser pulses to create detailed 3D maps of the surroundings. LIDAR is particularly useful for detecting and tracking objects, as well as measuring distances accurately.

o RADAR (Radio Detection and Ranging): Emits radio waves to detect objects and measure their speed and distance. RADAR is less affected by weather conditions and can penetrate fog, rain, and dust.

o Ultrasonic Sensors: Typically used for short-range detection, such as parking assistance and detecting obstacles close to the vehicle.

• Object Detection and Classification

The data collected by the sensors must be processed to identify and classify objects in the environment. This involves using machine learning algorithms and computer vision techniques to recognize various objects, such as:

o Vehicles (cars, trucks, bicycles, etc.)

o Pedestrians

o Road signs and traffic signals

o Lane markings and road boundaries

• Object Tracking

Once objects are detected and classified, they must be tracked over time to understand their movement and predict their future positions. This is crucial for making safe driving decisions, such as when to change lanes or stop for pedestrians.

• Sensor Fusion

To achieve a comprehensive understanding of the environment, data from multiple sensors is combined using sensor fusion techniques. By integrating information from cameras, LIDAR, RADAR, and other sensors, the vehicle can create a more accurate and reliable perception of its surroundings.

• Environmental Understanding

Beyond detecting and tracking individual objects, autonomous vehicles need to understand the overall context of their environment. This includes recognizing road conditions, construction zones, and other dynamic elements that could impact driving decisions.

• Real-time Processing

Perception data must be processed in real time to enable timely decision-making. This requires powerful computing hardware and efficient algorithms to ensure that the vehicle can respond quickly to changing conditions.

The combination of these components allows autonomous vehicles to perceive their environment accurately and make safe, informed decisions while navigating. The continuous advancements in sensor technology, machine learning, and computer vision are driving the progress of perception systems in autonomous vehicles.
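To make the sensor-fusion idea a bit more concrete, here is a minimal Python sketch of one classic fusion rule, inverse-variance weighting, applied to three distance readings of the same object. The sensor names, distances, and noise levels (`sigma_m`) are hypothetical illustration values, and the rule assumes independent, unbiased, Gaussian errors. Real AD stacks typically rely on far more elaborate filters (e.g., Kalman-style trackers), so treat this as a sketch, not a production method.

```python
"""Inverse-variance fusion of independent range estimates (illustrative sketch)."""
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RangeEstimate:
    sensor: str        # hypothetical sensor label, e.g. "camera"
    distance_m: float  # measured distance to the object, in meters
    sigma_m: float     # assumed 1-sigma measurement noise, in meters


def fuse(estimates: List[RangeEstimate]) -> Tuple[float, float]:
    """Return (fused distance, fused standard deviation).

    Each reading is weighted by 1 / sigma^2, so precise sensors dominate.
    Assumes independent, zero-mean Gaussian errors.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.sigma_m ** 2 for e in estimates]
    total = sum(weights)
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    fused_sigma = (1.0 / total) ** 0.5
    return fused, fused_sigma


if __name__ == "__main__":
    readings = [
        RangeEstimate("camera", 42.0, 2.0),
        RangeEstimate("lidar", 40.2, 0.1),
        RangeEstimate("radar", 40.5, 0.5),
    ]
    distance, sigma = fuse(readings)
    print(f"fused distance: {distance:.2f} m +/- {sigma:.2f} m")
```

The fused estimate leans heavily toward the most precise sensor, and its uncertainty is smaller than any single sensor's. That only holds while the noise assumptions hold, and bad weather, dirty lenses, and miscalibration are precisely the conditions that break them.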

Perception systems in autonomous vehicles have made significant strides, but they still face several limitations:

1. Weather Conditions: Adverse weather conditions such as heavy rain, snow, fog, and glare from the sun can degrade the performance of sensors like cameras and LIDAR. This can result in reduced accuracy and reliability in object detection and tracking.

2. Sensor Range and Resolution: Each type of sensor has its own limitations in terms of range and resolution. For example, cameras may have limited night vision capabilities, while LIDAR may have difficulty detecting objects at greater distances. This can impact the vehicle's ability to perceive its environment accurately.

3. Occlusions: Objects that are partially or fully occluded by other objects can be challenging to detect and track. For example, a pedestrian hidden behind a parked car might not be visible to the vehicle's sensors until they step into the road.

4. Dynamic Environments: Autonomous vehicles must navigate constantly changing environments with unpredictable elements, such as pedestrians, cyclists, and other vehicles. This requires real-time processing and quick decision-making, which can be difficult to achieve consistently.

5. Complex Scenarios: Certain scenarios, such as construction zones, emergency vehicles, and unusual road layouts, can be difficult for perception systems to interpret accurately. These situations often require a high level of contextual understanding and adaptability.

6. Calibration and Maintenance: Sensors need to be precisely calibrated and maintained to ensure optimal performance. Misaligned or dirty sensors can lead to inaccurate data and compromised perception.

7. Computational Power: Real-time processing of large amounts of sensor data requires significant computational power. Ensuring that the vehicle's hardware can handle these demands without overheating or consuming too much energy is a challenge.

8. Ethical and Legal Concerns: Perception systems must be designed to make ethical decisions in complex scenarios. This includes prioritizing the safety of different road users and complying with varying traffic laws and regulations across regions.

Addressing these limitations requires ongoing advancements in sensor technology, machine learning algorithms, and system integration. Researchers and engineers are continually working to improve the robustness and reliability of perception systems to ensure the safe and effective deployment of autonomous vehicles.

The Decision-Making Challenge

AD decision-making involves several critical processes to ensure safe and efficient navigation. Here's a breakdown of how decision-making works:

1. Perception:

Sensor Data Collection: The vehicle collects data from various sensors (cameras, LiDAR, radar, etc.) to perceive its surroundings.

Object Detection and Classification: The data is processed to identify and classify objects like pedestrians, vehicles, traffic signs, and road markings.

2. Localization and Mapping:

Position Estimation: The vehicle uses GPS and high-definition maps to determine its precise location on the road.

Environment Mapping: It creates a detailed map of its immediate surroundings, updating it in real-time.

3. Path Planning:

Route Planning: The vehicle calculates an optimal route to reach its destination, considering traffic, road conditions, and legal constraints.

Trajectory Planning: It determines the best path to follow within the route, avoiding obstacles and ensuring smooth motion.

4. Behavior Prediction:

Predicting Movements: The vehicle predicts the behavior of other road users (e.g., whether a pedestrian will cross the street or a car will change lanes).

Risk Assessment: It assesses potential risks and decides on the best course of action to avoid collisions.

5. Action Selection:

Based on the above inputs, the vehicle selects the most appropriate action, such as accelerating, braking, or changing lanes.

Motion Control: It executes the selected actions through precise control of the vehicle's throttle, brakes, and steering.

Feedback Loop: The vehicle continuously monitors its environment and adjusts its actions as necessary to respond to dynamic changes.
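The perceive, predict, decide, act, and monitor loop above can be illustrated with a deliberately tiny Python sketch. It assumes constant-velocity prediction for a single tracked object and uses time-to-collision (TTC) to pick an action. The `Track` fields, the `Action` names, and the 2-second and 4-second thresholds are hypothetical, not taken from any real AD system.

```python
"""One pass of a toy perceive -> predict -> decide loop (hypothetical thresholds)."""
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    KEEP_SPEED = "keep speed"
    SLOW_DOWN = "slow down"
    BRAKE = "brake"


@dataclass
class Track:
    gap_m: float              # current distance to the tracked object, in meters
    closing_speed_mps: float  # how fast the gap shrinks; <= 0 means not approaching


def time_to_collision(track: Track) -> float:
    """Constant-velocity prediction: seconds until the gap closes."""
    if track.closing_speed_mps <= 0:
        return float("inf")  # gap is constant or growing
    return track.gap_m / track.closing_speed_mps


def decide(track: Track, brake_ttc_s: float = 2.0, slow_ttc_s: float = 4.0) -> Action:
    """Map the predicted risk (TTC) to a discrete action."""
    ttc = time_to_collision(track)
    if ttc < brake_ttc_s:
        return Action.BRAKE
    if ttc < slow_ttc_s:
        return Action.SLOW_DOWN
    return Action.KEEP_SPEED


if __name__ == "__main__":
    # Each frame stands for one new perception update in the feedback loop.
    for frame in [Track(60.0, 5.0), Track(30.0, 10.0), Track(15.0, 10.0)]:
        print(frame, "->", decide(frame).value)
```

Each call to `decide` stands for one pass through the feedback loop. A real vehicle repeats it many times per second for many objects at once, and the hard part is everything this sketch assumes away: reliable tracks, honest uncertainty estimates, and road users who do not move at constant velocity.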

Example Scenarios

Imagine an autonomous vehicle approaching a busy intersection, and later merging onto a highway. Here's how it might make decisions in several common scenarios:

Scenario 1: Pedestrian Crossing

Perception: The vehicle's sensors detect a pedestrian near the crosswalk and classify them as a high-priority object.

Prediction: The vehicle predicts that the pedestrian is likely to cross the street.

Decision: The vehicle decides to slow down and come to a stop to allow the pedestrian to cross safely.

Action: It applies the brakes smoothly and stops at the crosswalk.

Monitoring: The vehicle continuously monitors the pedestrian's movement and waits until they have safely crossed before proceeding.

Scenario 2: Merging Onto A Highway

Perception: The vehicle detects other vehicles on the highway and assesses their speeds and positions.

Prediction: It predicts the future positions of the vehicles to find a safe gap for merging.

Decision: The vehicle decides to accelerate or decelerate to match the speed of the traffic and align with the gap.

Action: It adjusts the throttle and steering to merge smoothly into the traffic flow.

Monitoring: The vehicle continuously monitors surrounding traffic and adjusts its speed and position as needed.
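The merging scenario boils down to a gap-acceptance check. Below is a minimal Python sketch, assuming every vehicle in the target lane keeps a constant speed until the merge point and that the ego car can switch to a candidate speed immediately. The 4-second merge horizon, the 20 m minimum gap, and the candidate speeds are hypothetical parameters chosen only for illustration.

```python
"""Gap-acceptance sketch for a highway merge (hypothetical parameters)."""
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Vehicle:
    position_m: float  # longitudinal position along the target lane, in meters
    speed_mps: float   # assumed constant speed, in meters per second


def predict_position(v: Vehicle, t_s: float) -> float:
    """Constant-velocity prediction of the position after t_s seconds."""
    return v.position_m + v.speed_mps * t_s


def choose_merge_speed(
    ego: Vehicle,
    lane: Sequence[Vehicle],
    t_merge_s: float = 4.0,
    candidates: Sequence[float] = (22.0, 25.0, 28.0, 31.0),
    min_gap_m: float = 20.0,
) -> Optional[float]:
    """Return a candidate speed leaving at least min_gap_m to every predicted
    vehicle at merge time, preferring speeds closest to the average traffic
    speed. Assumes the ego car adopts the candidate speed instantly.
    Returns None if no candidate is safe."""
    traffic_speed = (
        sum(v.speed_mps for v in lane) / len(lane) if lane else ego.speed_mps
    )
    for speed in sorted(candidates, key=lambda s: abs(s - traffic_speed)):
        ego_at_merge = predict_position(Vehicle(ego.position_m, speed), t_merge_s)
        if all(
            abs(predict_position(v, t_merge_s) - ego_at_merge) >= min_gap_m
            for v in lane
        ):
            return speed
    return None


if __name__ == "__main__":
    ego = Vehicle(position_m=0.0, speed_mps=25.0)
    lane = [Vehicle(10.0, 25.0), Vehicle(-60.0, 28.0)]
    print("chosen merge speed (m/s):", choose_merge_speed(ego, lane))
```

Candidates are tried in order of closeness to the average traffic speed, mirroring the "match the speed of the traffic" decision above. With the sample lane, the two speeds closest to traffic leave too small a gap to the lead car, so the sketch picks 22 m/s and slots in behind it.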

Scenario 3: Obstacle Avoidance

Perception: The vehicle's sensors detect the obstacle and classify it as a hazard.

Prediction: The vehicle predicts the possible paths around the obstacle and assesses the safety of each path.

Decision: The vehicle decides to steer around the obstacle while maintaining a safe distance from other road users.

Action: It adjusts the steering and speed to maneuver around the obstacle safely.

Monitoring: The vehicle continuously monitors the new path and surrounding environment to ensure it remains clear.

Scenario 4: Emergency Vehicle Encounter

Perception: The vehicle's sensors detect the siren sound and identify the direction of the emergency vehicle.

Prediction: It predicts the path of the emergency vehicle and assesses the best way to yield.

Decision: The vehicle decides to pull over to the side of the road to allow the emergency vehicle to pass.

Action: It signals and safely steers to the roadside, slowing down or stopping as necessary.

Monitoring: The vehicle monitors the emergency vehicle's passage and resumes its journey once it has safely passed.

Additional System-Level Integration Challenges

As previously explained, AD systems incorporate hundreds of CPUs and software modules, including sensors, actuators, complex algorithms, ML systems, RADARs, LIDARs, video cameras, etc. And even if each such sub-system is 99% accurate, chaining 100 of them together (where every one of them must work, and their failures are independent) drops the overall system accuracy to less than… 37%, since 0.99 to the power of 100 is about 0.366.

Needless to say, a 37% success probability is worse than the 50% odds of a coin flip. So, your chances of getting into an accident are quite… random.
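The 37% figure is simply 0.99 multiplied by itself 100 times. A short Python sketch of that series-reliability calculation follows. It assumes, as the argument above does, that every sub-system must work for the trip to succeed and that failures are independent; redundant or fault-tolerant designs change this arithmetic, so treat it as a back-of-the-envelope model rather than a safety analysis.

```python
"""Series reliability: every one of n independent sub-systems must work."""


def series_reliability(p: float, n: int) -> float:
    """Probability that all n sub-systems succeed, each with probability p.

    Assumes independent failures and no redundancy or fallback.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    return p ** n


if __name__ == "__main__":
    for n in (1, 10, 50, 100, 200):
        print(f"{n:>3} sub-systems at 99% each: {series_reliability(0.99, n):.1%}")
```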

So, when can we finally begin to trust AD? My answer is quite simple: when AD PROCESSES start adhering to established Quality Management standards, such as Six Sigma certification…

Six Sigma is a process improvement strategy that improves output quality by reducing defects. It originated in the 1980s. Six Sigma is named after a statistical concept where a process produces only 3.4 defects per million opportunities (DPMO). Six Sigma can therefore also be thought of as a goal, where processes not only encounter fewer defects, but do so consistently (low variability).

Six Sigma dictates that the nearest specification limit lies six standard deviations from the process mean. Allowing for the conventional 1.5-sigma long-term drift of the mean, this corresponds to the 3.4 DPMO mentioned above, or a 99.99966% defect-free rate, maximizing efficiency and reducing defects. This high level of accuracy makes Six Sigma an essential metric for measuring and controlling product quality.
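The link between a sigma level and the 3.4 DPMO figure can be checked with the normal distribution. This sketch assumes a one-sided specification limit and the conventional 1.5-sigma long-term shift of the process mean. `dpmo` and `upper_tail` are illustrative helper names; only Python's standard `math.erfc` is used.

```python
"""Sigma level -> defects per million opportunities (DPMO)."""
import math


def upper_tail(z: float) -> float:
    """P(Z > z) for a standard normal random variable Z."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def dpmo(sigma_level: float, shift: float = 1.5) -> float:
    """One-sided DPMO when the specification limit sits sigma_level standard
    deviations from the target and the process mean has drifted `shift`
    standard deviations toward that limit (1.5 by Six Sigma convention)."""
    return upper_tail(sigma_level - shift) * 1_000_000


if __name__ == "__main__":
    for level in (3, 4, 5, 6):
        defects = dpmo(level)
        yield_pct = 100.0 - defects / 10_000  # DPMO -> percent defective
        print(f"{level} sigma: {defects:,.1f} DPMO, {yield_pct:.5f}% defect-free")
```

With the 1.5-sigma shift, six sigma gives about 3.4 DPMO (99.99966% defect-free). Without the shift (`shift=0`), the same tail calculation gives roughly 0.001 DPMO, which is why quoted Six Sigma percentages vary depending on the convention used.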

Of course, the AD pundits will tell you right away that AD cars get into fewer accidents than human drivers do. But it is all relative, not absolute, and here is why: if humans drive a car 100,000,000 times each day and 10,000 accidents are reported, we are talking about 0.01%... However, if only 1,000 AD trips are taking place and there are 10 accidents reported, we are talking about 1%, which is 100X greater!
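Here is the same comparison expressed in a few lines of Python. The trip and accident counts are the hypothetical numbers from the paragraph above, not real-world statistics, and `accidents_per_trip` is an illustrative helper name.

```python
"""Accident rate per trip for the two hypothetical fleets in the text."""


def accidents_per_trip(accidents: int, trips: int) -> float:
    """Fraction of trips that end in a reported accident."""
    if trips <= 0:
        raise ValueError("trips must be positive")
    return accidents / trips


# Hypothetical daily counts taken from the paragraph above.
human = accidents_per_trip(10_000, 100_000_000)  # 1e-4, i.e. 0.01%
ad = accidents_per_trip(10, 1_000)               # 1e-2, i.e. 1%

print(f"human-driven: {human:.2%} of trips")
print(f"AD:           {ad:.2%} of trips")
print(f"AD rate is {ad / human:.0f}x the human rate")
```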

Accuracy, Precision, and Recall in AD

According to Encord: “In Machine Learning, the efficacy of a model is not just about its ability to make predictions but also to make the right ones. Practitioners use evaluation metrics to understand how well a model performs its intended task. They serve as a compass in the complex landscape of model performance. Accuracy, precision, and recall are important metrics that view the model's predictive capabilities.

Accuracy is the measure of a model's overall correctness across all classes. The most intuitive metric is the proportion of true results in the total pool. True results include true positives and true negatives. Accuracy may be insufficient in situations with imbalanced classes or different error costs.

Precision and recall address this gap. Precision measures how often predictions for the positive class are correct. Recall measures how well the model finds all positive instances in the dataset. To make informed decisions about improving and using a model, it's important to understand these metrics.

Understanding the difference between accuracy, precision, and recall is important in real-life situations. Each metric shows a different aspect of the model's performance.

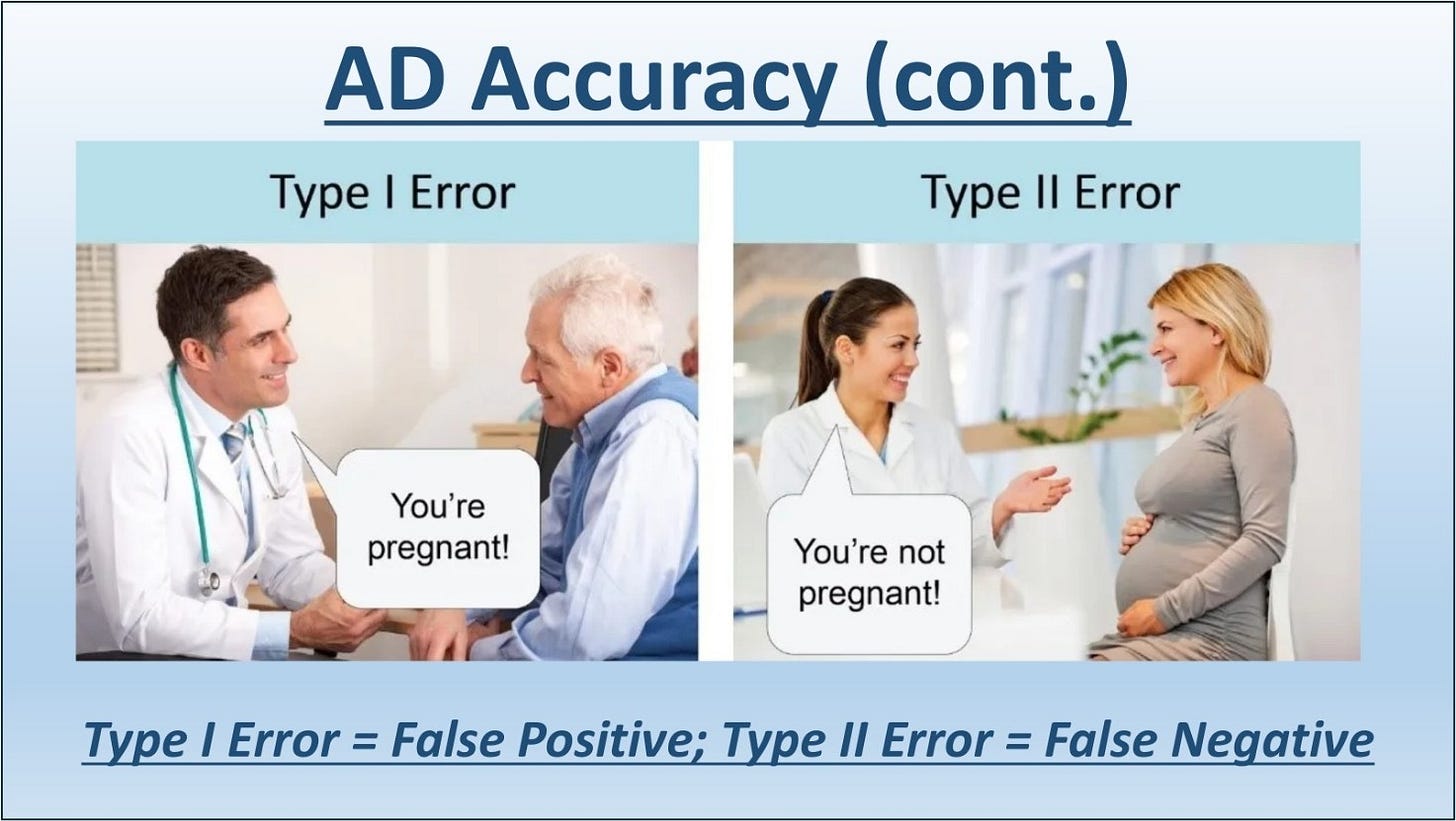

The Confusion Matrix

The confusion matrix shows how well the model performs. Data scientists and machine learning practitioners can assess their models' accuracy and areas for improvement with a visual representation.

Let's break down how accuracy, precision, and recall relate to true positives, false positives, true negatives, and false negatives in the context of classification problems:

Accuracy

Accuracy measures the overall correctness of the model. It's the ratio of correctly predicted instances (both positive and negative) to the total instances.

Example: If you have 100 tests, and 90 of them are correctly identified (both positives and negatives), the accuracy is 90%.

Precision

Precision measures the correctness of positive predictions. It's the ratio of true positive predictions to all positive predictions (both true and false).

Example: If the test identifies 20 people as having the disease (true positive + false positive), and 15 of these actually have the disease (true positive), the precision is 75%.

Recall (Sensitivity)

Recall measures the model's ability to identify all actual positive instances. It's the ratio of true positive predictions to all actual positive instances (both true positives and false negatives).

Example: If 25 people actually have the disease, and the test correctly identifies 20 of them (true positive), the recall is 80%.

A model with high precision but low recall might be very confident in the positive predictions it makes, but it might miss a lot of actual positives. On the other hand, a model with high recall but low precision might catch most of the positives, but with a lot of false alarms.
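For readers who prefer code to prose, here is a minimal Python sketch of the three metrics computed from confusion-matrix counts. The `ConfusionMatrix` class is a hypothetical helper written for this post; the counts in the usage lines simply reproduce the worked examples above.

```python
"""Accuracy, precision, and recall from confusion-matrix counts."""
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # true positives: actual positives predicted positive
    fp: int  # false positives: actual negatives predicted positive
    tn: int  # true negatives: actual negatives predicted negative
    fn: int  # false negatives: actual positives predicted negative

    def accuracy(self) -> float:
        """Correct predictions (TP + TN) over all predictions."""
        total = self.tp + self.fp + self.tn + self.fn
        return (self.tp + self.tn) / total if total else 0.0

    def precision(self) -> float:
        """TP over everything predicted positive (TP + FP)."""
        predicted_positive = self.tp + self.fp
        return self.tp / predicted_positive if predicted_positive else 0.0

    def recall(self) -> float:
        """TP over everything actually positive (TP + FN)."""
        actual_positive = self.tp + self.fn
        return self.tp / actual_positive if actual_positive else 0.0


if __name__ == "__main__":
    # 100 tests, 90 correct (15 TP + 75 TN); 20 flagged positive, 15 of them real.
    cm = ConfusionMatrix(tp=15, fp=5, tn=75, fn=5)
    print(f"accuracy:  {cm.accuracy():.0%}")   # 90%
    print(f"precision: {cm.precision():.0%}")  # 75%
    # Separate recall example: 25 actual positives, 20 of them found.
    print(f"recall:    {ConfusionMatrix(tp=20, fp=0, tn=0, fn=5).recall():.0%}")  # 80%
```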

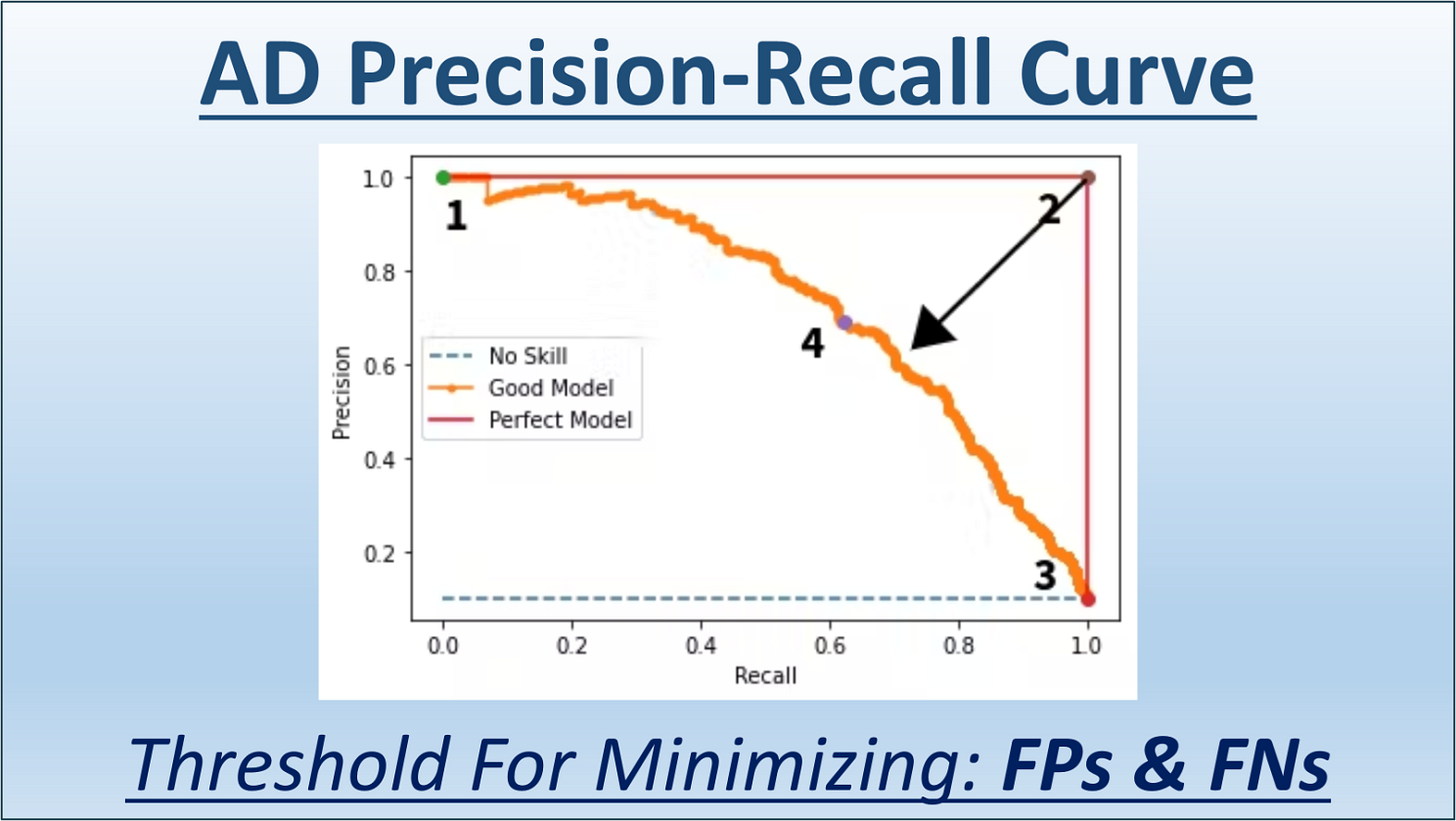

Precision-Recall Curve

Precision and recall, two commonly used metrics in classification, often present a trade-off that requires careful consideration based on the specific application and its requirements.

There's an inherent trade-off between precision and recall. Improving precision often comes at the expense of recall and vice versa. For instance, a model that predicts only the most certain positive cases will have high precision but may miss out on many actual positive cases, leading to low recall.

The precision-recall curve is a graphical representation that showcases the relationship between precision and recall for different threshold settings. It is especially valuable for imbalanced datasets where one class is significantly underrepresented compared to others. In these scenarios, traditional metrics like accuracy can be misleading, as they might reflect the predominance of the majority class rather than the model's ability to identify the minority class correctly.

The precision-recall curve measures how well the minority class is predicted. The measurement checks how accurately we make positive predictions and detect actual positives. The curve is an important tool for assessing model performance in imbalanced datasets. It helps choose an optimal threshold that balances precision and recall effectively.

The closer this curve approaches the top-right corner of the graph, the more capable the model is at achieving high precision and recall simultaneously, indicating a robust performance in distinguishing between classes, regardless of their frequency in the dataset.”
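The curve itself is easy to compute: sweep a decision threshold over the model's scores and record precision and recall at each step. The sketch below does that in plain Python. The scores and labels are made-up toy data, and `pr_curve` is a hypothetical helper rather than a library function (scikit-learn's `precision_recall_curve` offers a ready-made equivalent).

```python
"""Precision-recall pairs obtained by sweeping a decision threshold."""
from typing import List, Sequence, Tuple


def pr_curve(scores: Sequence[float], labels: Sequence[int]) -> List[Tuple[float, float, float]]:
    """Return (threshold, precision, recall) for each distinct score,
    from the strictest threshold to the most lenient one.

    labels are 1 for the positive class and 0 for the negative class.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    total_positives = sum(labels)
    points = []
    for threshold in sorted(set(scores), reverse=True):
        # Everything scoring at or above the threshold is predicted positive.
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        precision = tp / (tp + fp)  # never zero: the threshold is one of the scores
        recall = tp / total_positives if total_positives else 0.0
        points.append((threshold, precision, recall))
    return points


if __name__ == "__main__":
    # Toy data: model scores and ground-truth labels (1 = positive class).
    scores = [0.95, 0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2]
    labels = [1, 1, 0, 1, 0, 1, 0, 0]
    for threshold, precision, recall in pr_curve(scores, labels):
        print(f"threshold {threshold:.2f}: precision {precision:.2f}, recall {recall:.2f}")
```

On this toy data, lowering the threshold from 0.9 to 0.2 raises recall from 0.5 to 1.0 while precision drops from 1.0 to 0.5, which is the trade-off described above.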

So, until AD systems can clearly demonstrate such a curve on previously unseen, billion-case test sets and deliver Six Sigma results, I will pass… And do not rush to upgrade your hardware either. We are not there yet…

In Summary

For all the remarkable recent progress in AI research, we are still very far from creating machines that think and learn as well as people do. As Meta AI’s Chief AI Scientist Yann LeCun notes: “A teenager who has never sat behind a steering wheel can learn to drive in about 20 hours, while the best autonomous driving systems today need millions or billions of pieces of labeled training data and millions of reinforcement learning trials in virtual environments. And even then, they fall short of human’s ability to drive a car reliably.”

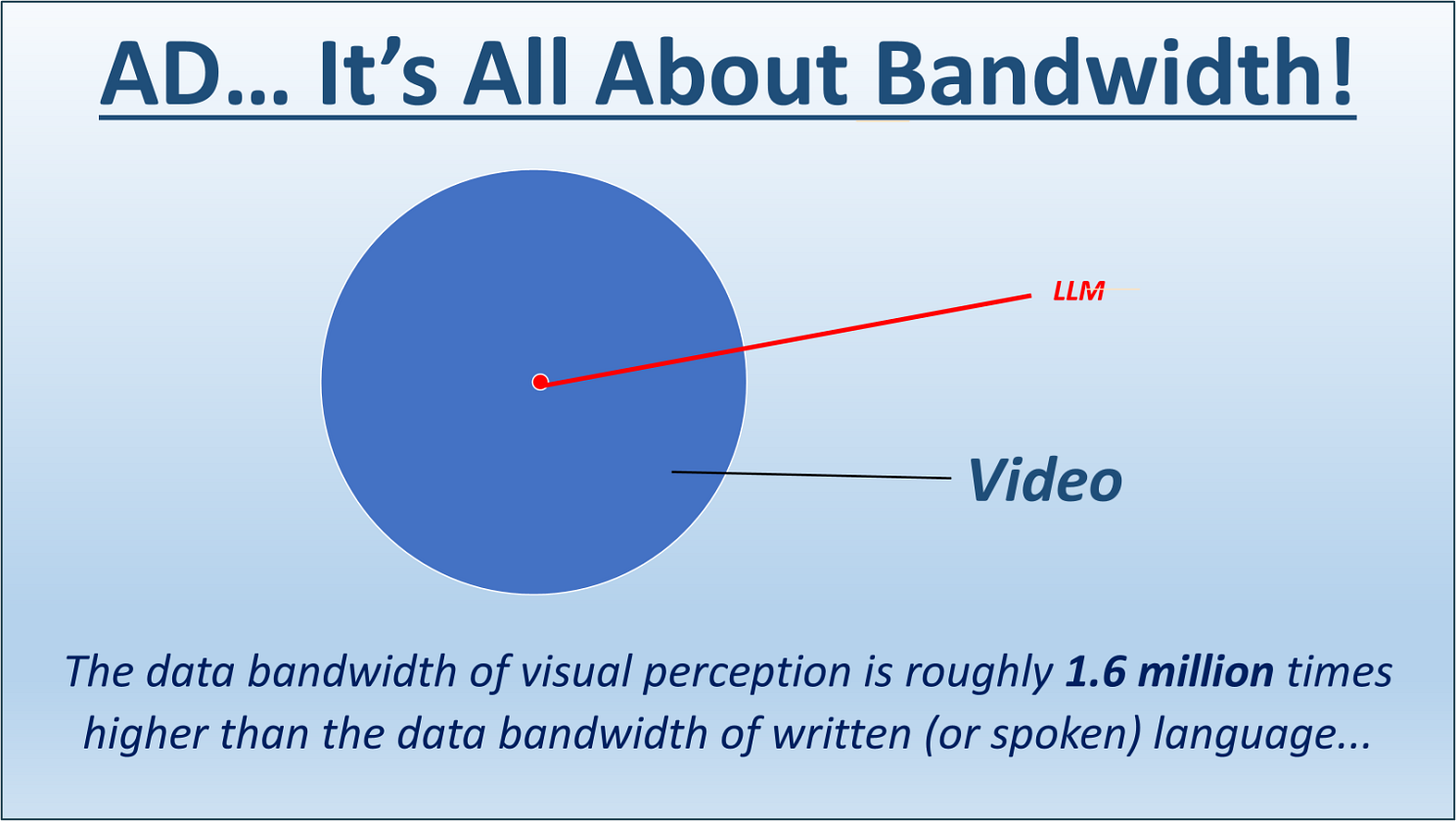

* Language is low bandwidth: less than 12 bytes/second. A person can read 270 words/minute, or 4.5 words/second, which is 12 bytes/s (assuming 2 bytes per token and 0.75 words per token). A modern LLM is typically trained with 1x10^13 two-byte tokens, which is 2x10^13 bytes. This would take about 100,000 years for a person to read (at 12 hours a day).

* Vision is much higher bandwidth: about 20MB/s. Each of the two optical nerves has 1 million nerve fibers, each carrying about 10 bytes per second. A 4-year-old child has been awake a total of 16,000 hours, which translates into 1x10^15 bytes. In other words:

The data bandwidth of visual perception is roughly 1.6 million times higher than the data bandwidth of written (or spoken) language.

In a mere 4 years, a child has seen 50 times more data than the biggest LLMs trained on all the text publicly available on the internet. This tells us three things:

1. Yes, text is redundant, and visual signals in the optical nerves are even more redundant (despite being 100x compressed versions of the photoreceptor outputs in the retina). But redundancy in data is precisely what we need for Self-Supervised Learning to capture the structure of the data. The more redundancy, the better for SSL.

2. Most of human knowledge (and almost all of animal knowledge) comes from our sensory experience of the physical world. Language is the icing on the cake. We need the cake to support the icing.

3. There is absolutely no way in hell we will ever reach human-level AI without getting machines to learn from high-bandwidth sensory inputs, such as vision.

Yes, humans can get smart without vision, even pretty smart without vision and audition. But not without touch. Touch is pretty high bandwidth, too.”
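The arithmetic behind the quoted figures is easy to reproduce. The Python sketch below uses only the inputs stated in the quote (reading speed, words per token, bytes per token, nerve fibers, awake hours) plus a 365-day year; the variable names are my own.

```python
"""Back-of-the-envelope check of the language vs. vision bandwidth figures."""
SECONDS_PER_HOUR = 3600

# Language: reading speed and token assumptions stated in the quote.
words_per_second = 270 / 60                    # 4.5 words/s
tokens_per_second = words_per_second / 0.75    # 0.75 words per token
text_bytes_per_second = tokens_per_second * 2  # 2 bytes per token
llm_training_bytes = 1e13 * 2                  # 1x10^13 two-byte tokens

# Reading the whole corpus at 12 hours a day, 365 days a year.
reading_years = llm_training_bytes / text_bytes_per_second / (12 * SECONDS_PER_HOUR) / 365

# Vision: two optic nerves x 1 million fibers x ~10 bytes/s each.
vision_bytes_per_second = 2 * 1_000_000 * 10
child_bytes = 16_000 * SECONDS_PER_HOUR * vision_bytes_per_second  # 16,000 awake hours

bandwidth_ratio = vision_bytes_per_second / text_bytes_per_second
data_ratio = child_bytes / llm_training_bytes

print(f"text bandwidth:   {text_bytes_per_second:.0f} bytes/s")
print(f"years to read:    {reading_years:,.0f}")
print(f"vision bandwidth: {vision_bytes_per_second / 1e6:.0f} MB/s")
print(f"child's data:     {child_bytes:.2e} bytes")
print(f"bandwidth ratio:  {bandwidth_ratio:,.0f}")
print(f"data ratio:       {data_ratio:.1f}")
```

The script gives roughly 106,000 years of reading, a vision-to-text bandwidth ratio of about 1.7 million, and about 58 times more visual data than the LLM corpus, in line with the approximate 100,000 years, 1.6 million, and 50X figures quoted above.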

So, when do I think AD will finally work reliably? My answer is quite simple… Only when ALL vehicles are fully connected to the cloud via Starlink/SpaceX satellite links will AD work reliably, as intended. And only Elon Musk may pull it off… much faster than you think.

Just think about it… Many new cars already have satellite connectivity in place for emergency purposes, and a growing number of new phones can reach Starlink satellites directly. So, essentially, phone-carrying pedestrians and bicycle/bike riders can be connected to the satellites, too, making comprehensive geo-fencing… possible. What remains is to collect all such information and incorporate it into reliable Autonomous Driving networks. Now, the proverbial “Are we there yet?” question… has a different meaning, doesn’t it…

So, yes, Elon Musk has the right “key” to the gates of Autonomous Driving Prosperity. But there are many gates to try, and I advise him to stay away from the false ones…

Until then, the cost and complexities of brute-force embedding AD into the vehicles will make your favorite SUV look like a Russian tank from WWII...

For More Information

Please see my other posts on LinkedIn, Twitter, Substack, and CGE’s website.

AI Boogeyman

You can also find additional info in my hardcover and paperback books published on Amazon: “AI Boogeyman – Dispelling Fake News About Job Losses” and on our YouTube Studio channel…

SmartAB™ - SUBSCRIBE NOW

A Radically Innovative Advisory Board Subscription Service